Underwater Monocular Depth Estimation (UMDE) is a critical task that aims to estimate high-precision depth maps from underwater images degraded by light absorption and scattering in marine environments. Recently, Mamba-based methods have achieved promising performance across various vision tasks; however, they struggle with the UMDE task because their inflexible state scanning strategies fail to model the structural features of underwater images effectively. Meanwhile, existing UMDE datasets usually contain unreliable depth labels, leading to incorrect object-depth relationships between underwater images and their corresponding depth maps. To overcome these limitations, we develop a novel tree-aware Mamba method, dubbed Tree-Mamba, for estimating accurate monocular depth maps from degraded underwater images. Specifically, we propose a tree-aware scanning strategy that adaptively constructs a minimum spanning tree based on feature similarity. The spatial topological features among the tree nodes are then flexibly aggregated through bottom-up and top-down traversals, enabling stronger multi-scale feature representation. Moreover, we construct an underwater depth estimation benchmark (called BlueDepth), which consists of 38,162 underwater image pairs with reliable depth labels. This benchmark serves as a foundational dataset for training existing deep learning-based UMDE methods to learn accurate object-depth relationships. Extensive experiments demonstrate the superiority of the proposed Tree-Mamba over several leading methods in both qualitative results and quantitative evaluations, with competitive computational efficiency.
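To make the idea of a minimum spanning tree built from feature similarity more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a 4-connected pixel grid, Euclidean distance between neighboring feature vectors as the edge weight, and Kruskal's algorithm with union-find; the function name `build_feature_mst` and all of these choices are illustrative assumptions, since the abstract does not specify them.

```python
from math import sqrt


def build_feature_mst(features):
    """Return MST edges of a 4-connected grid weighted by feature dissimilarity.

    features: H x W grid (list of rows) of equal-length feature vectors.
    Returns a list of (u, v, weight), with nodes indexed as row * W + col.
    Assumption: Euclidean distance is used as the dissimilarity measure.
    """
    h, w = len(features), len(features[0])

    def dist(a, b):
        return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    # Collect edges between horizontal and vertical neighbors.
    edges = []
    for r in range(h):
        for c in range(w):
            node = r * w + c
            if c + 1 < w:
                edges.append((dist(features[r][c], features[r][c + 1]), node, node + 1))
            if r + 1 < h:
                edges.append((dist(features[r][c], features[r + 1][c]), node, node + w))
    edges.sort()  # Kruskal: consider the most similar neighbors first.

    # Union-find with path halving to detect cycles.
    parent = list(range(h * w))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    mst = []
    for weight, u, v in edges:
        root_u, root_v = find(u), find(v)
        if root_u != root_v:
            parent[root_u] = root_v
            mst.append((u, v, weight))
    return mst


# Toy 2 x 2 feature map: the top row and the bottom row are internally similar.
toy = [[[0], [1]], [[10], [11]]]
mst_edges = build_feature_mst(toy)
```

In this toy case the tree links the two similar pixels in each row first and joins the rows with a single high-cost edge, so the scan order follows feature structure rather than a fixed raster path.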

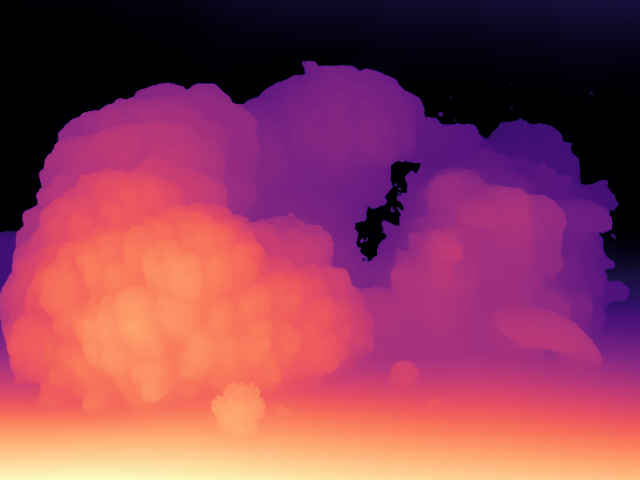

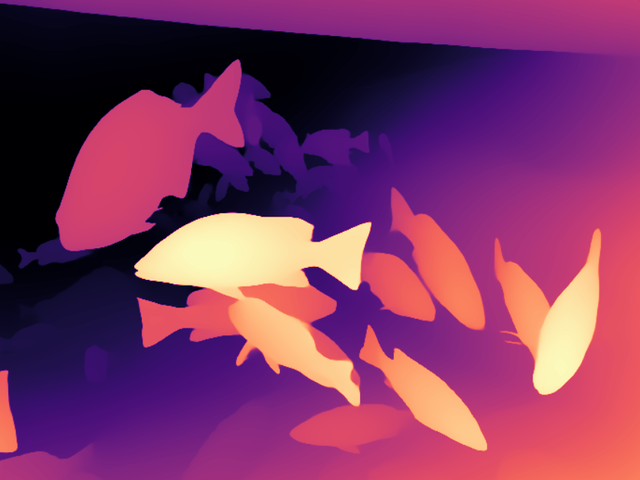

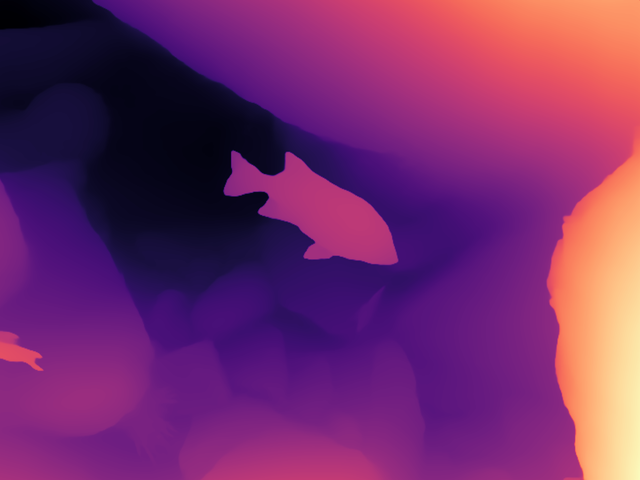

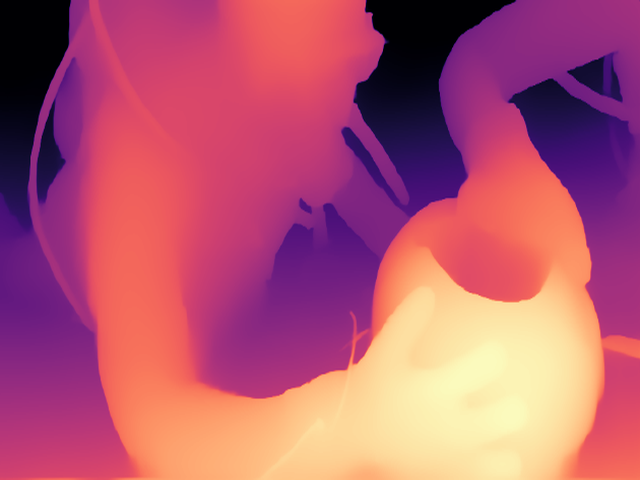

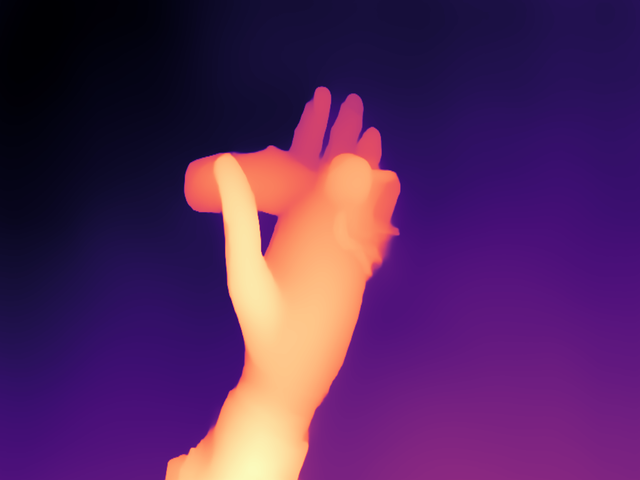

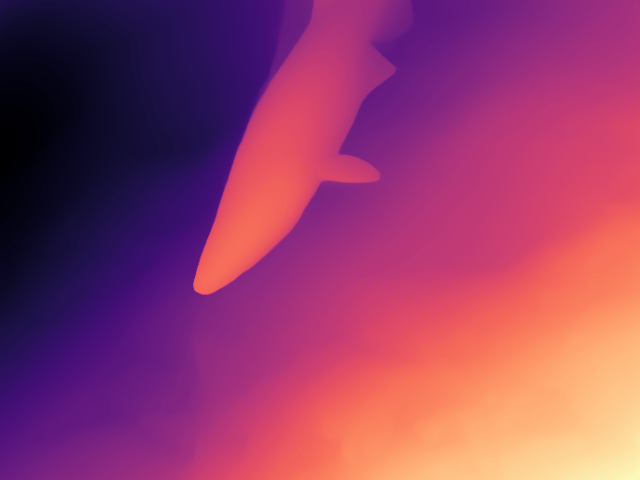

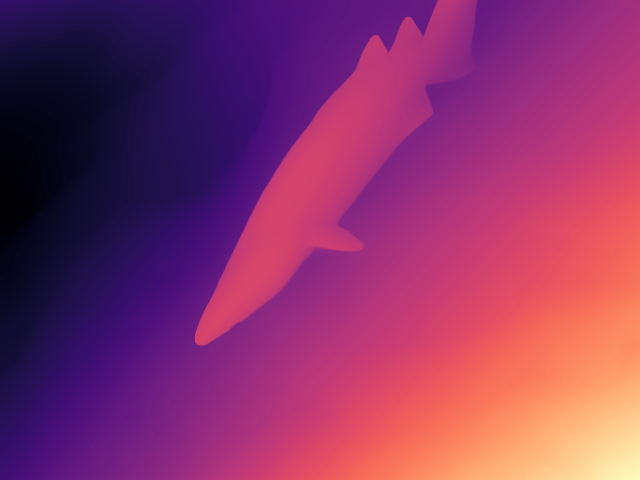

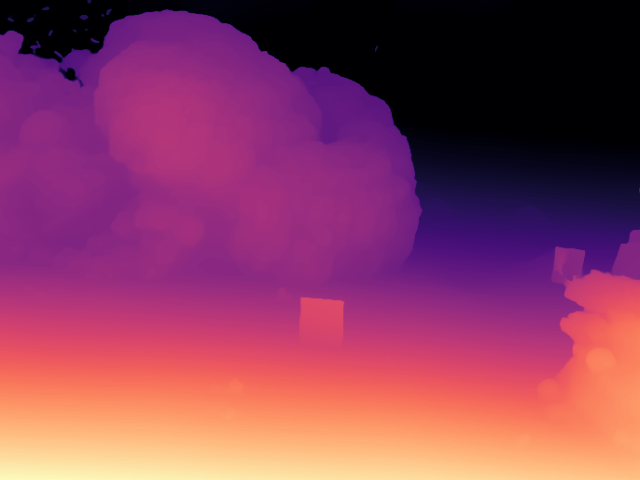

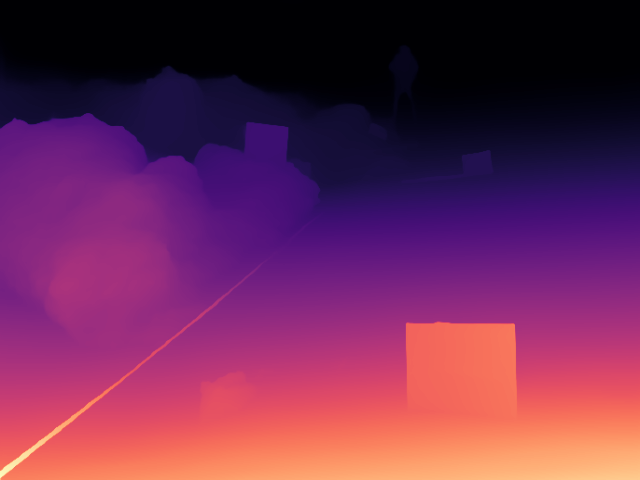

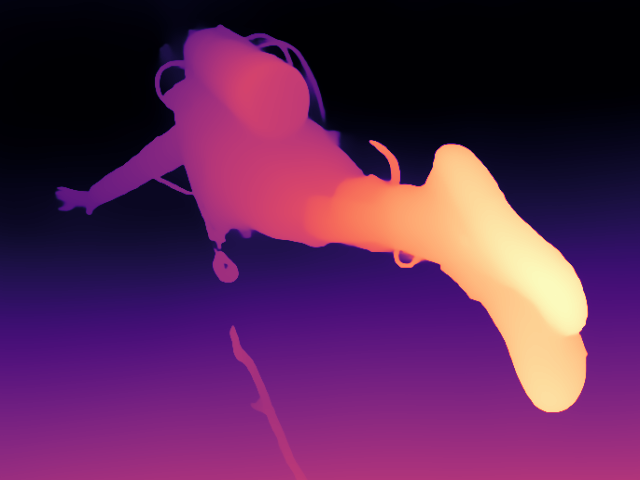

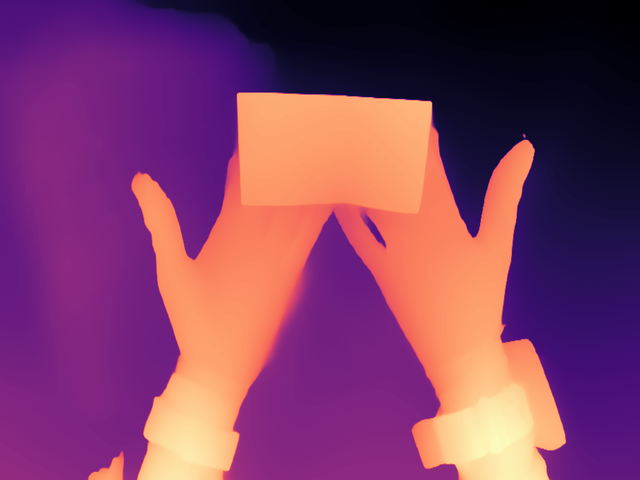

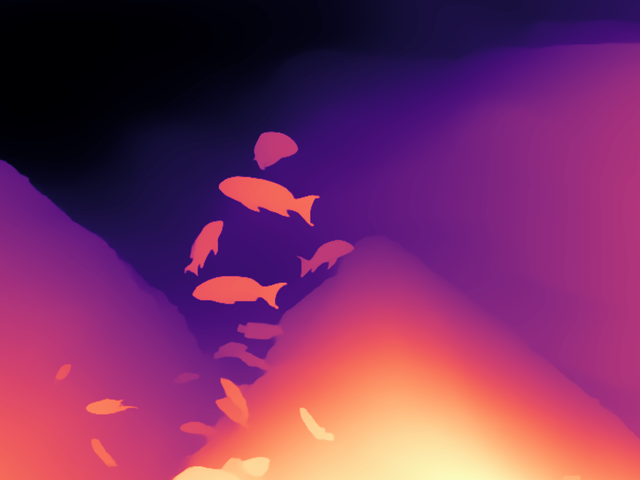

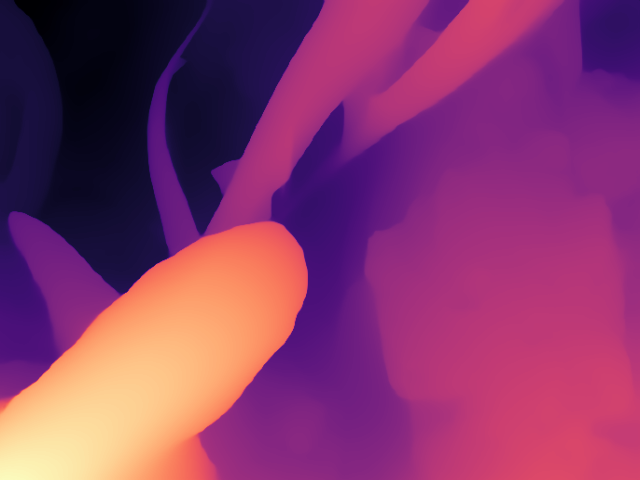

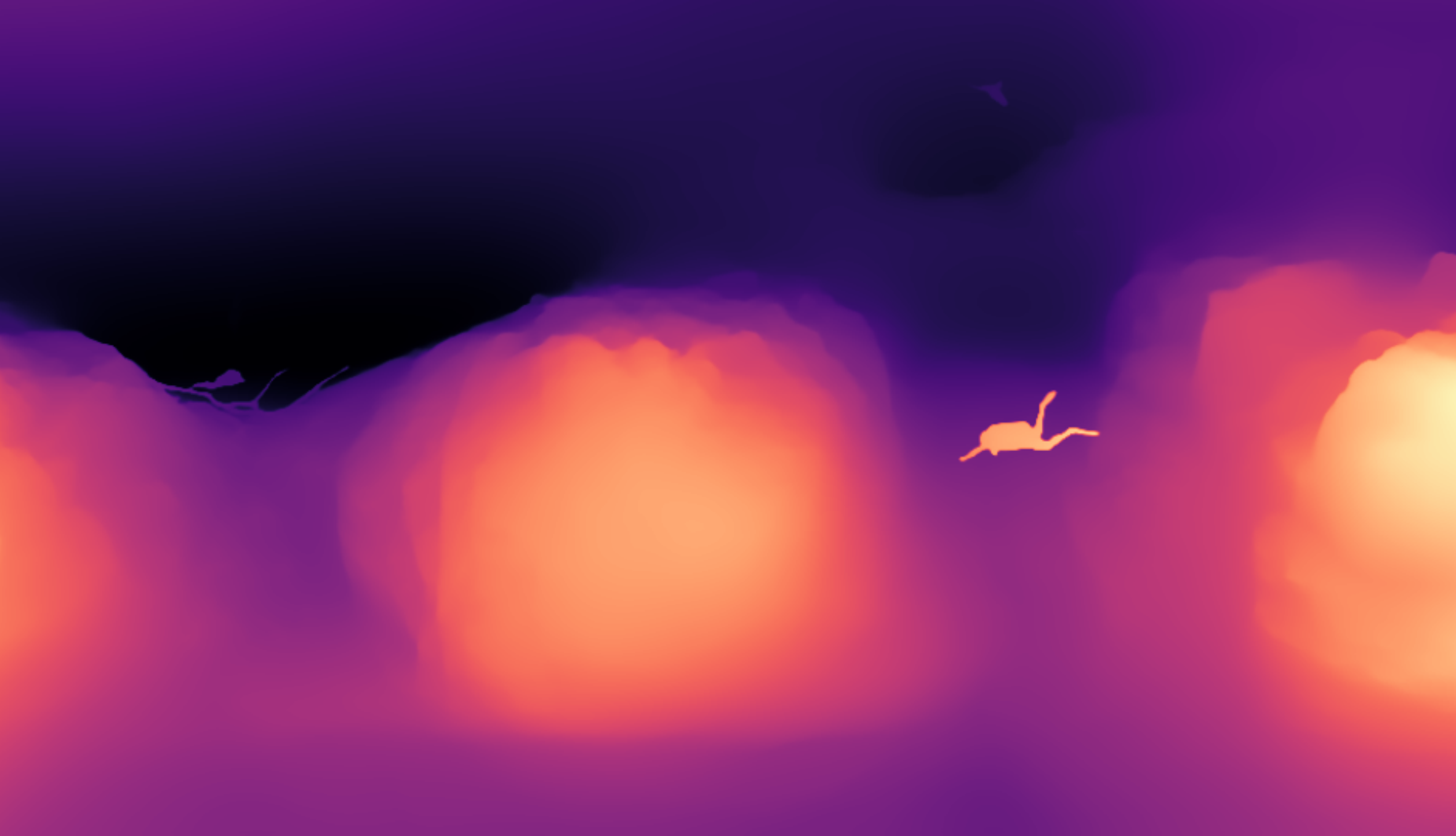

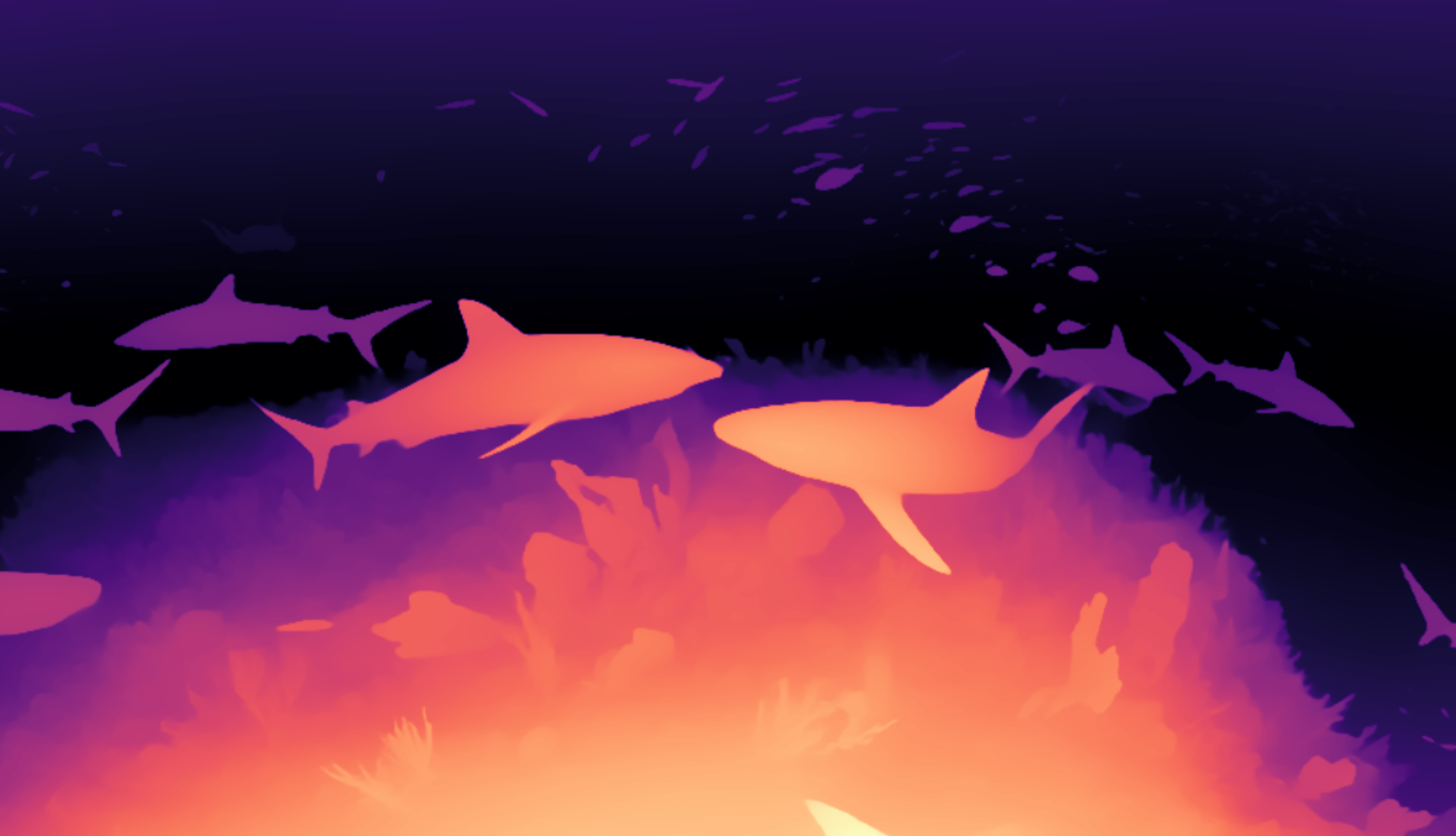

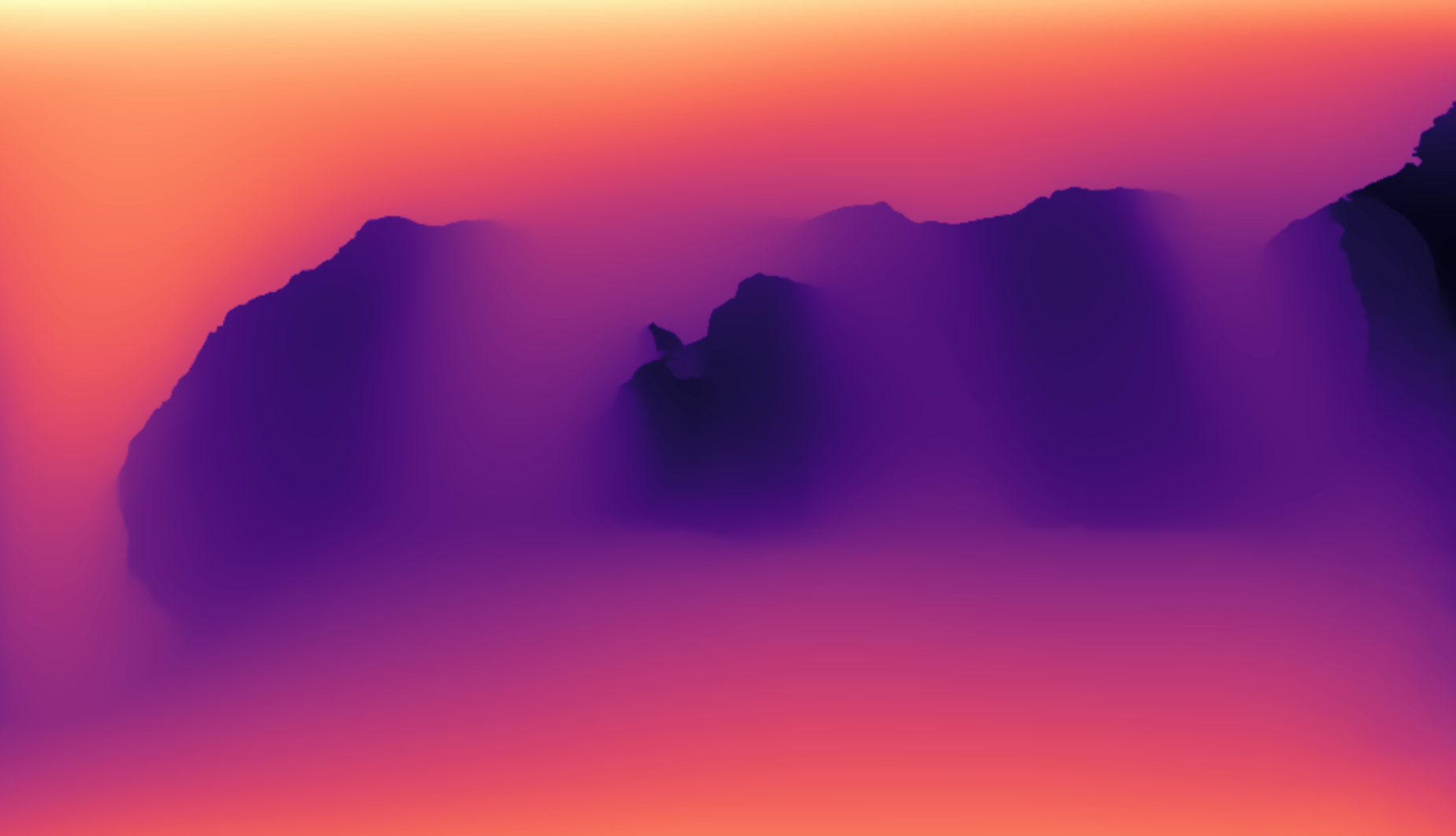

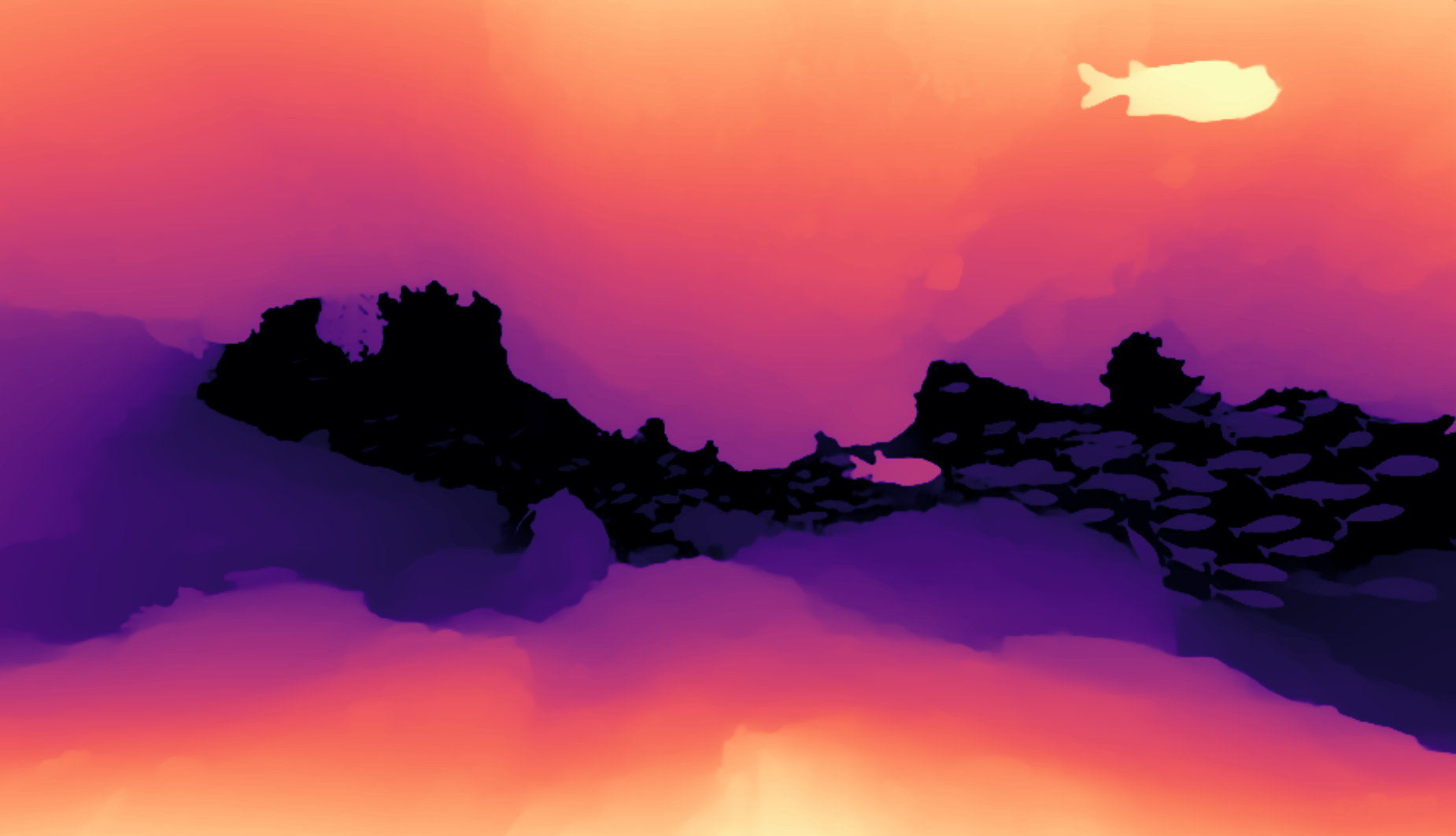

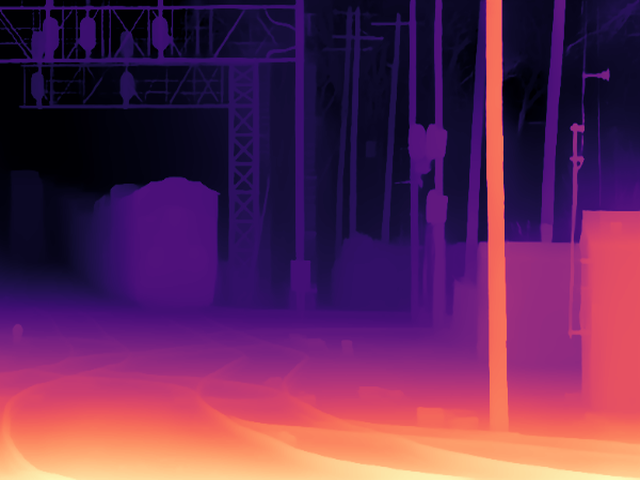

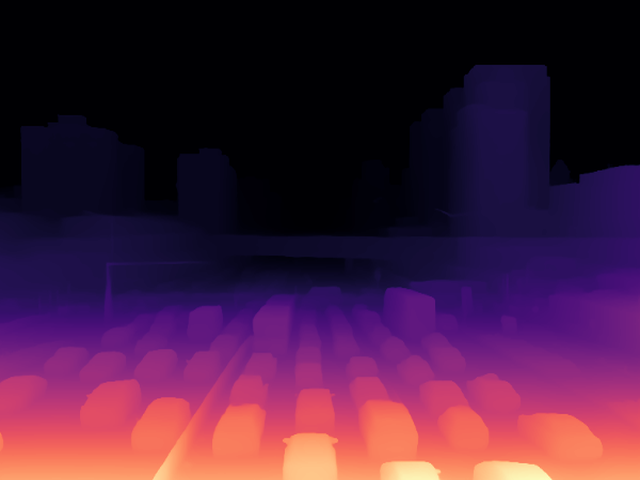

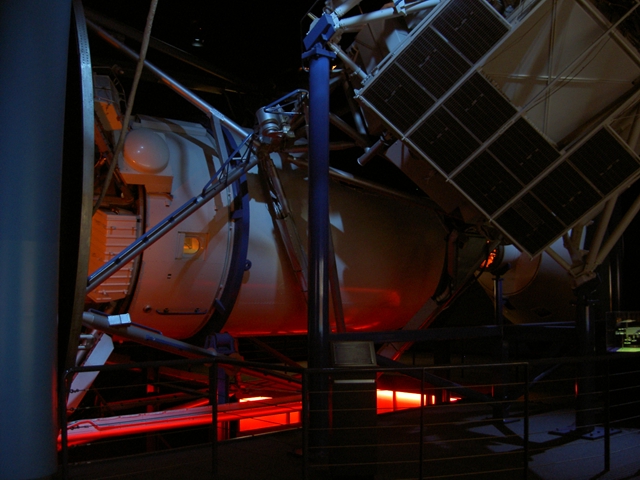

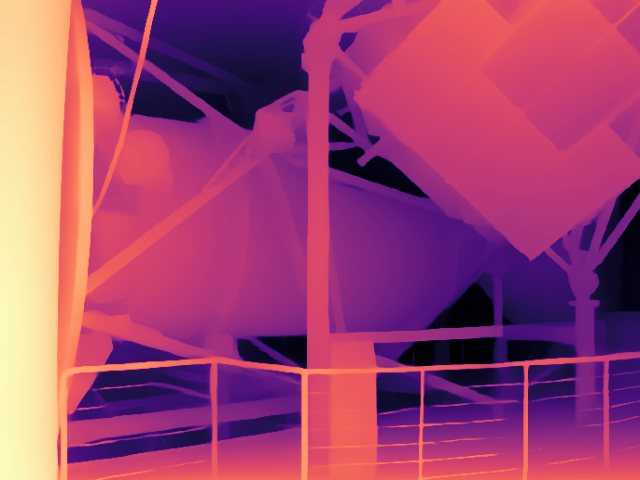

Depth predicted by our Tree-Mamba method on underwater videos. The left half shows the raw underwater videos, while the right half shows the predicted depth. The predictions are noticeably consistent across frames, which highlights the scalability of our Tree-Mamba.

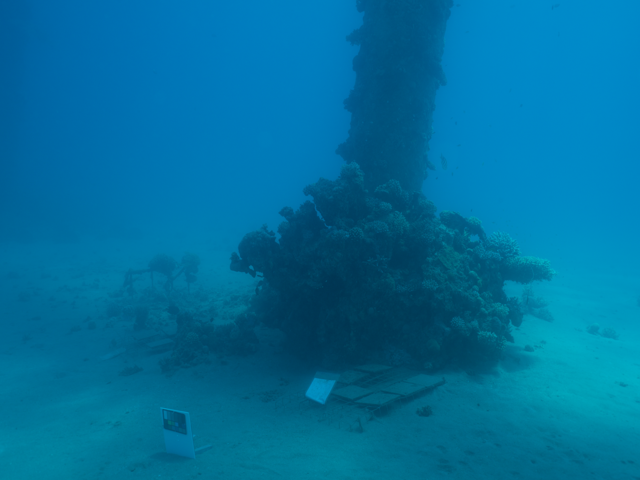

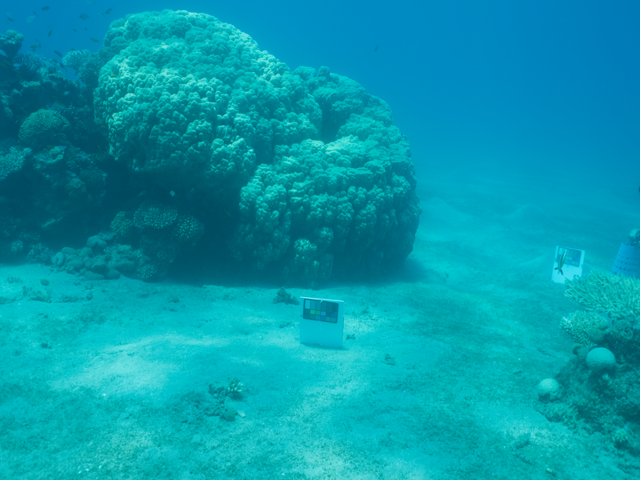

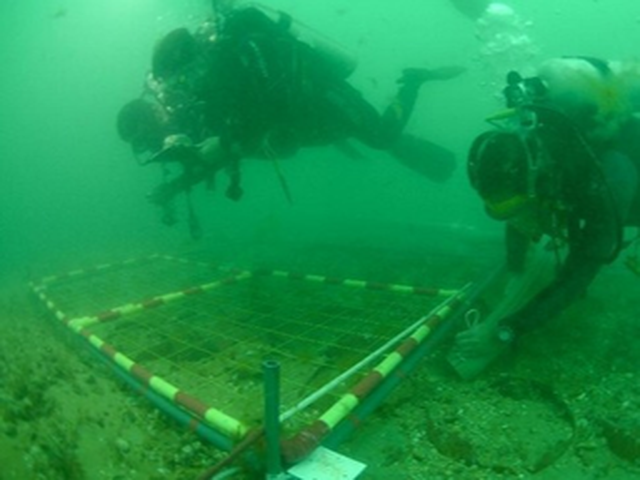

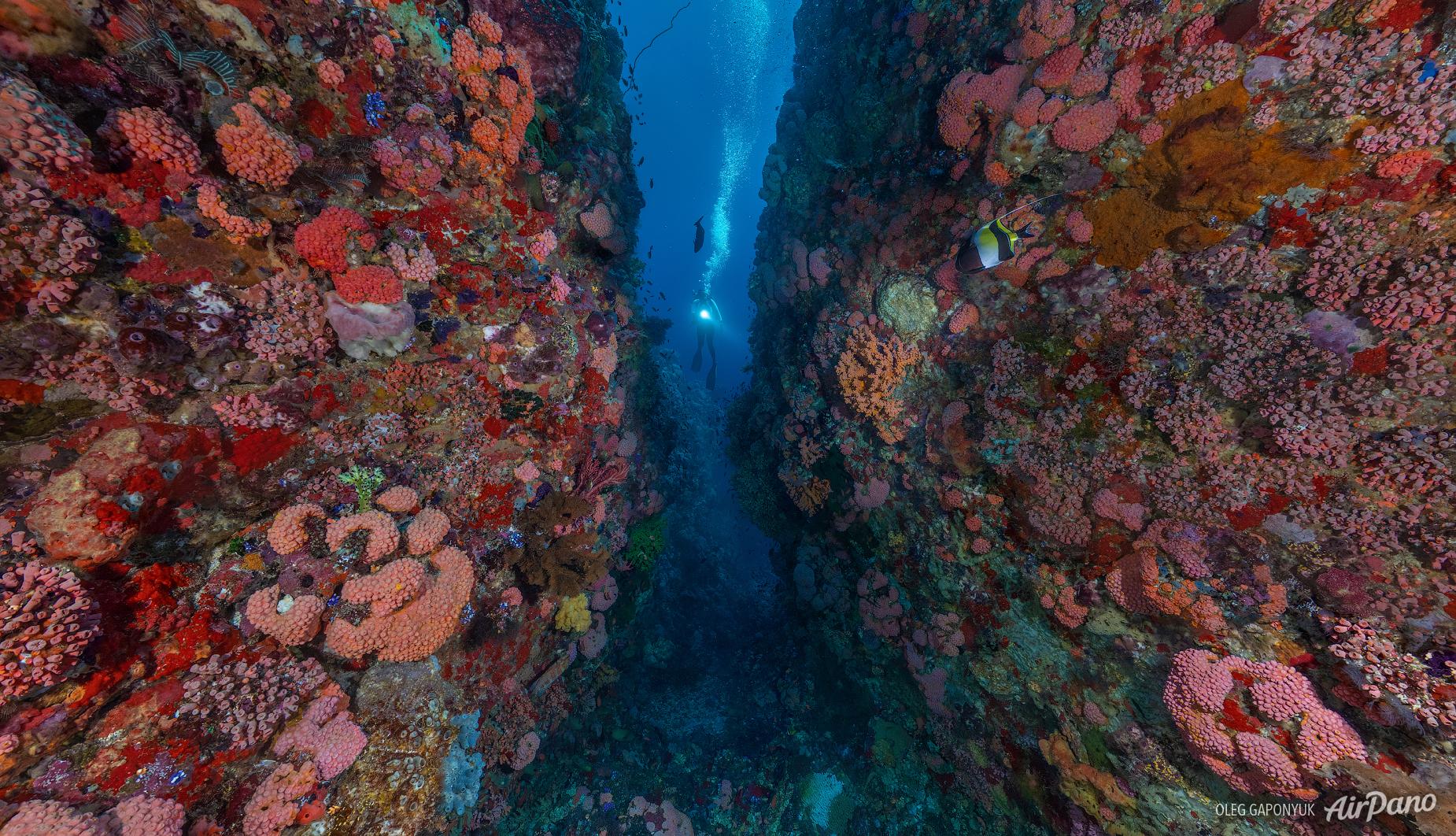

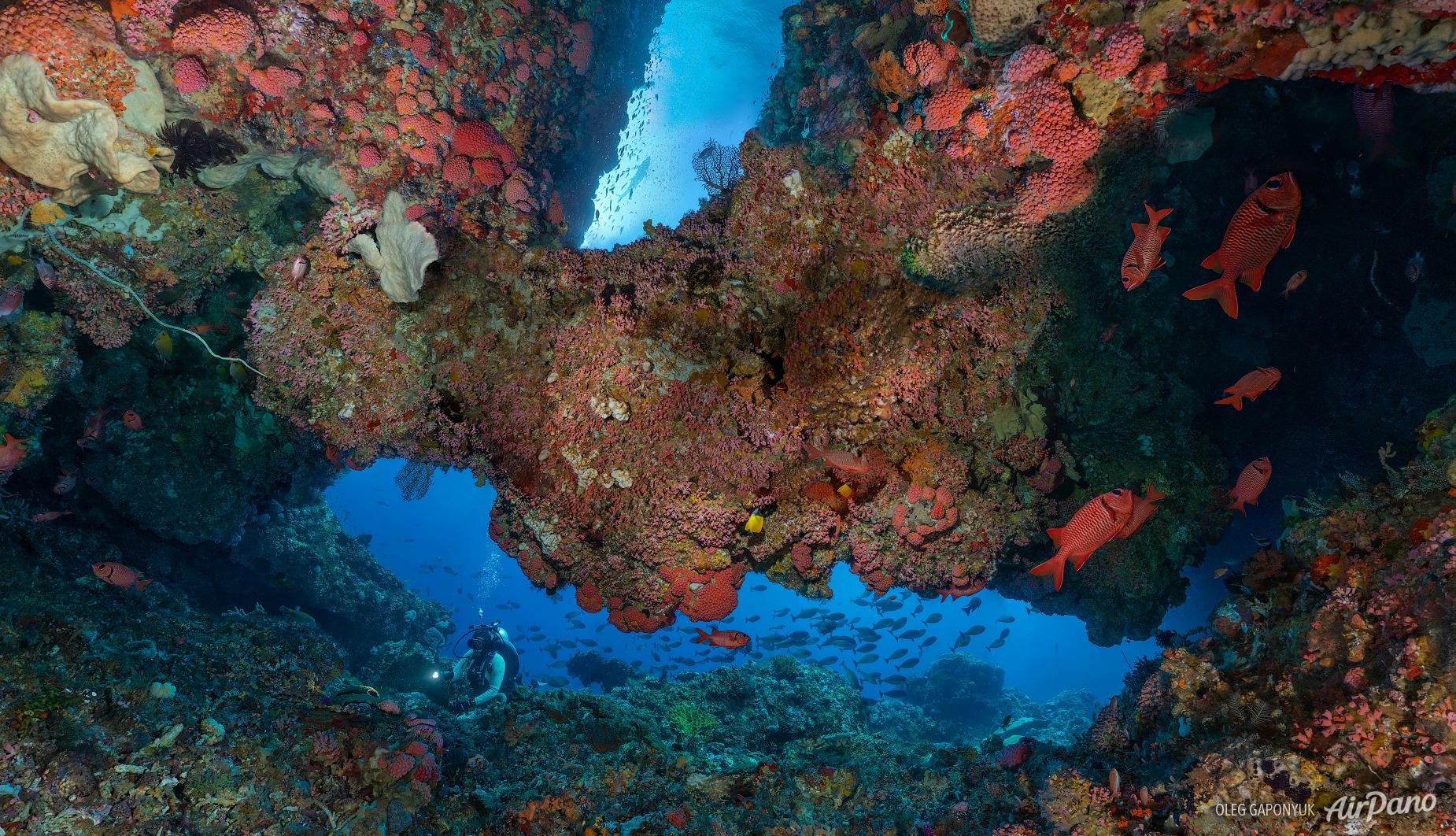

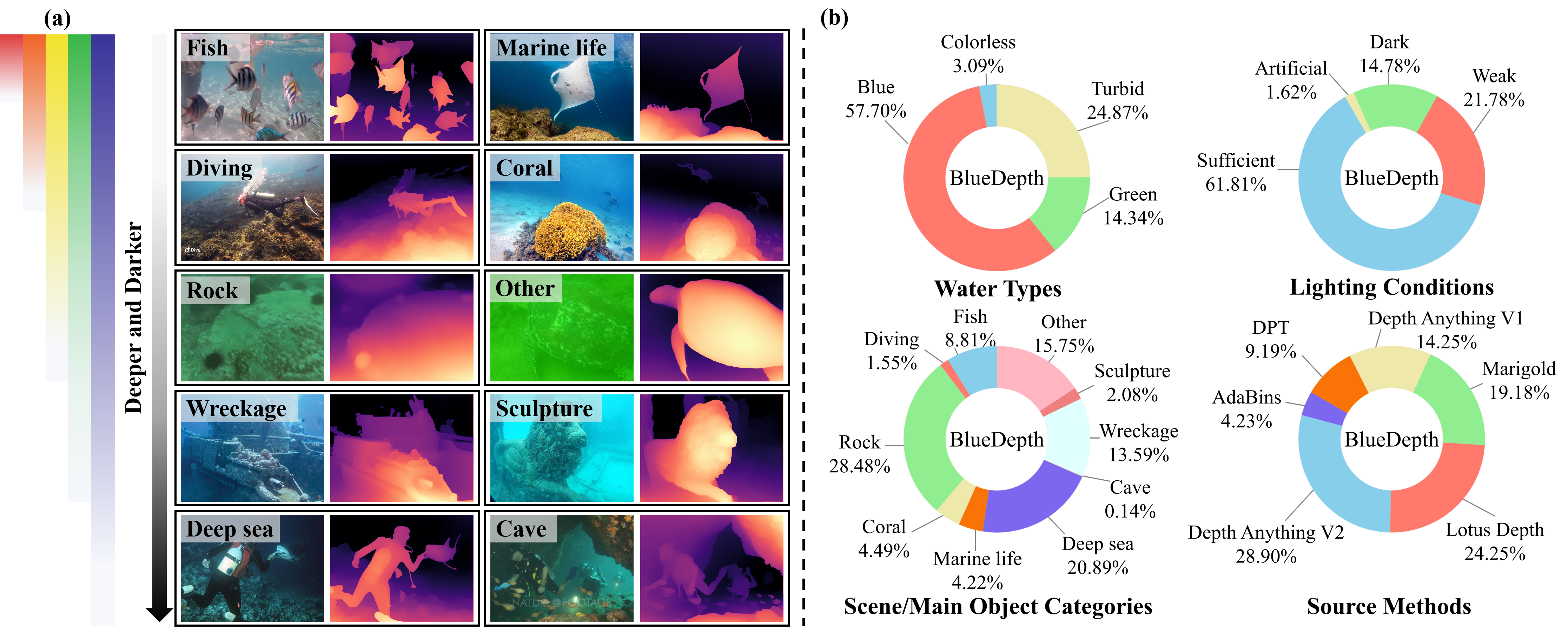

The example images and statistics of our constructed BlueDepth. (a) Example images of BlueDepth. (b) Statistics of BlueDepth. The BlueDepth dataset contains 38,162 underwater images with diverse scenes and various quality degradations.

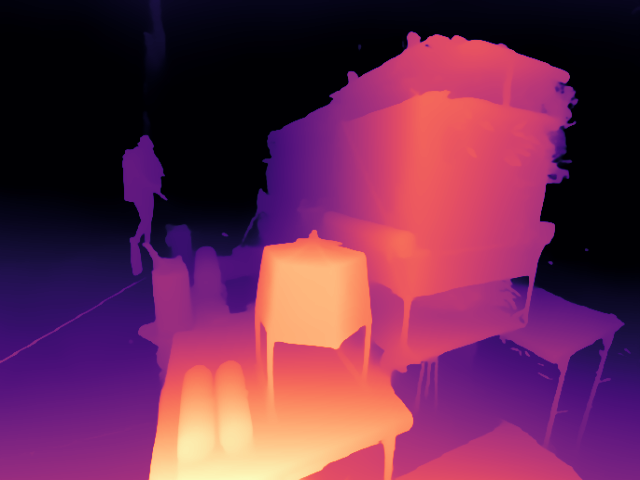

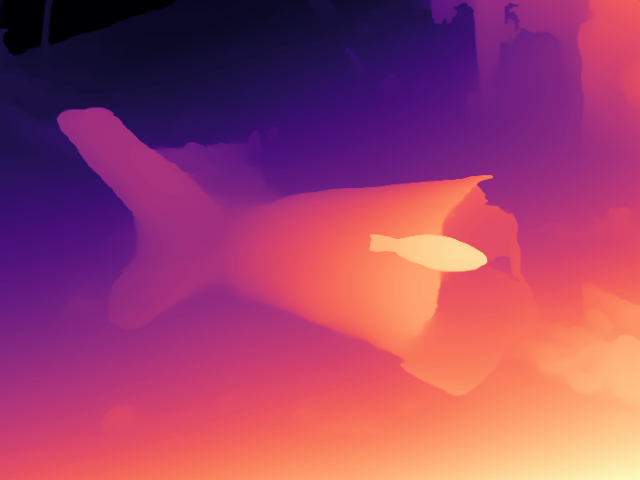

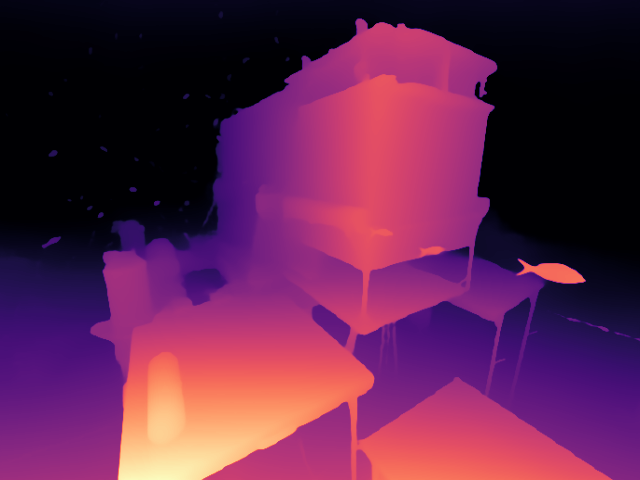

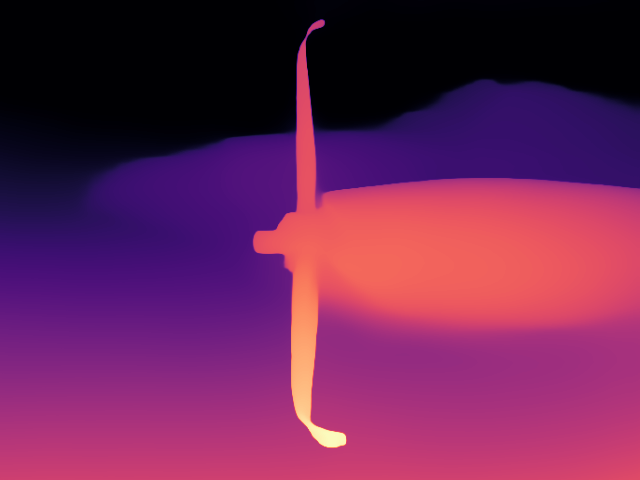

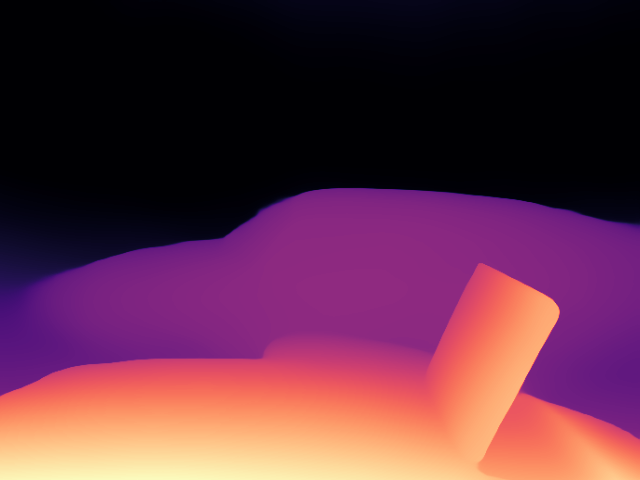

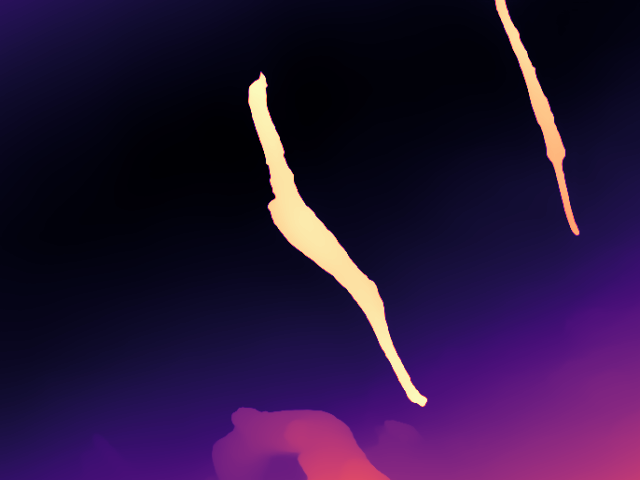

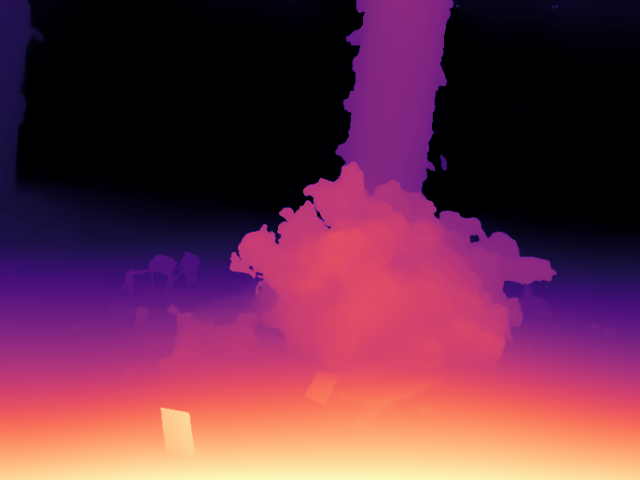

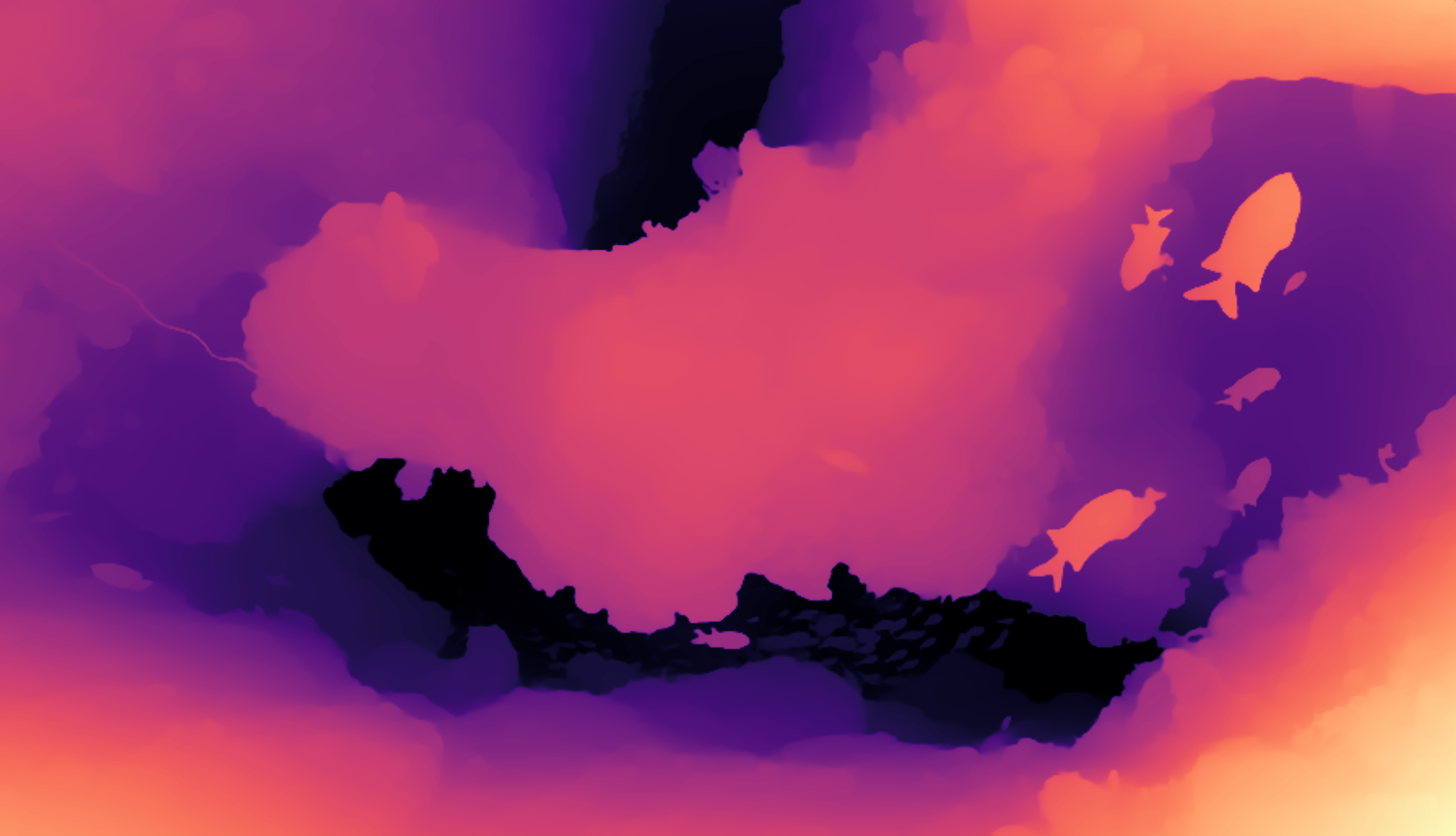

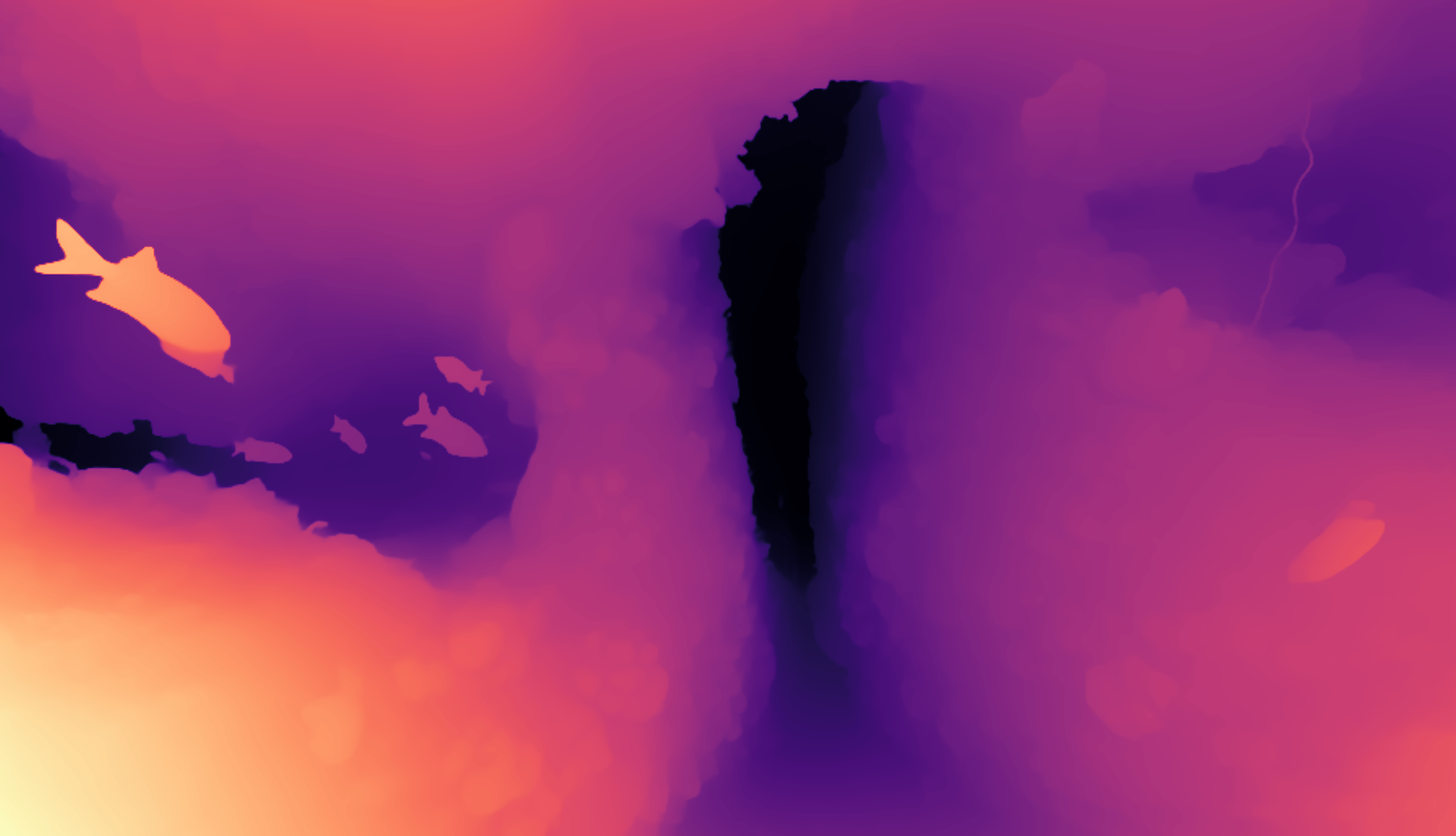

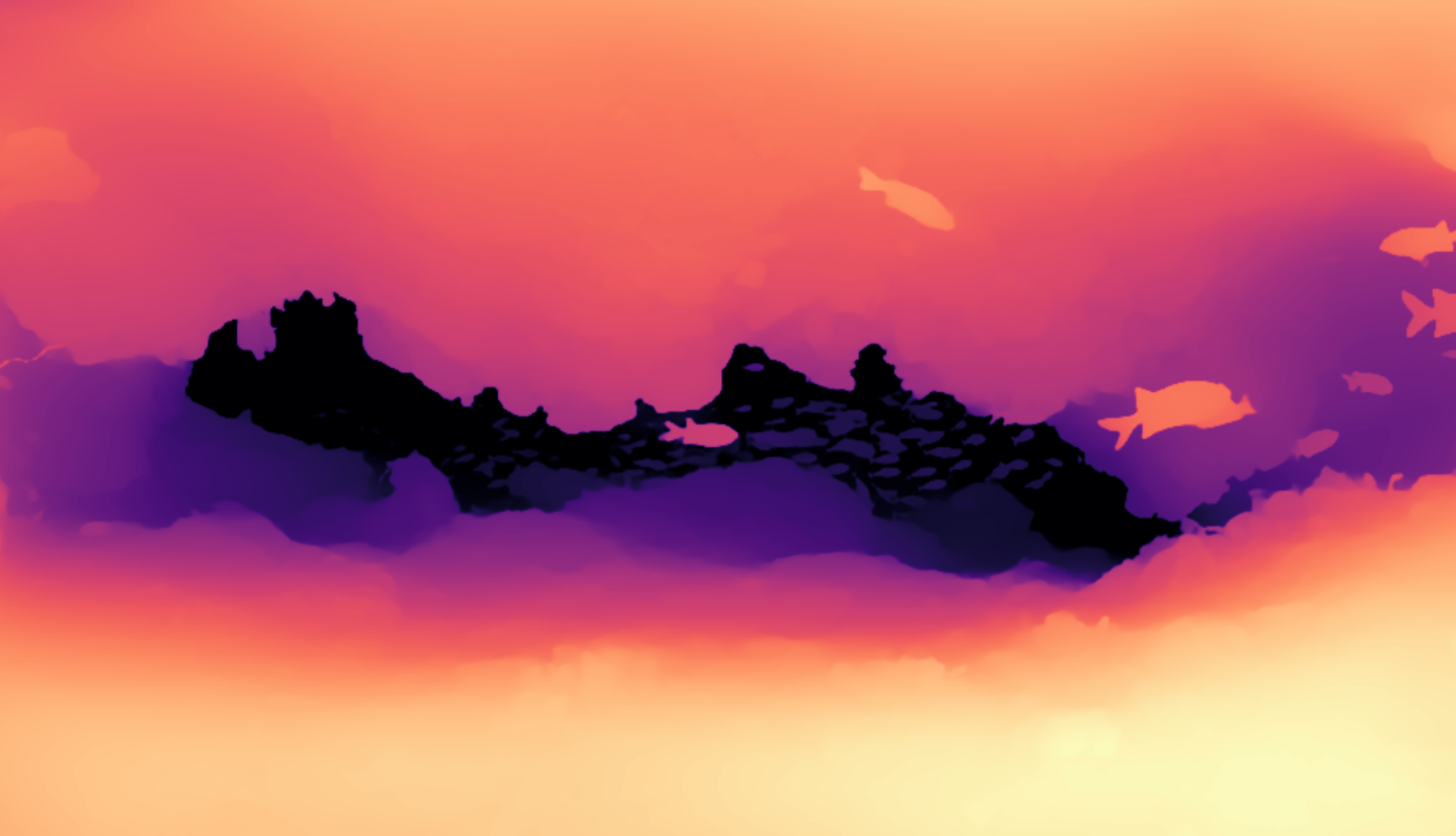

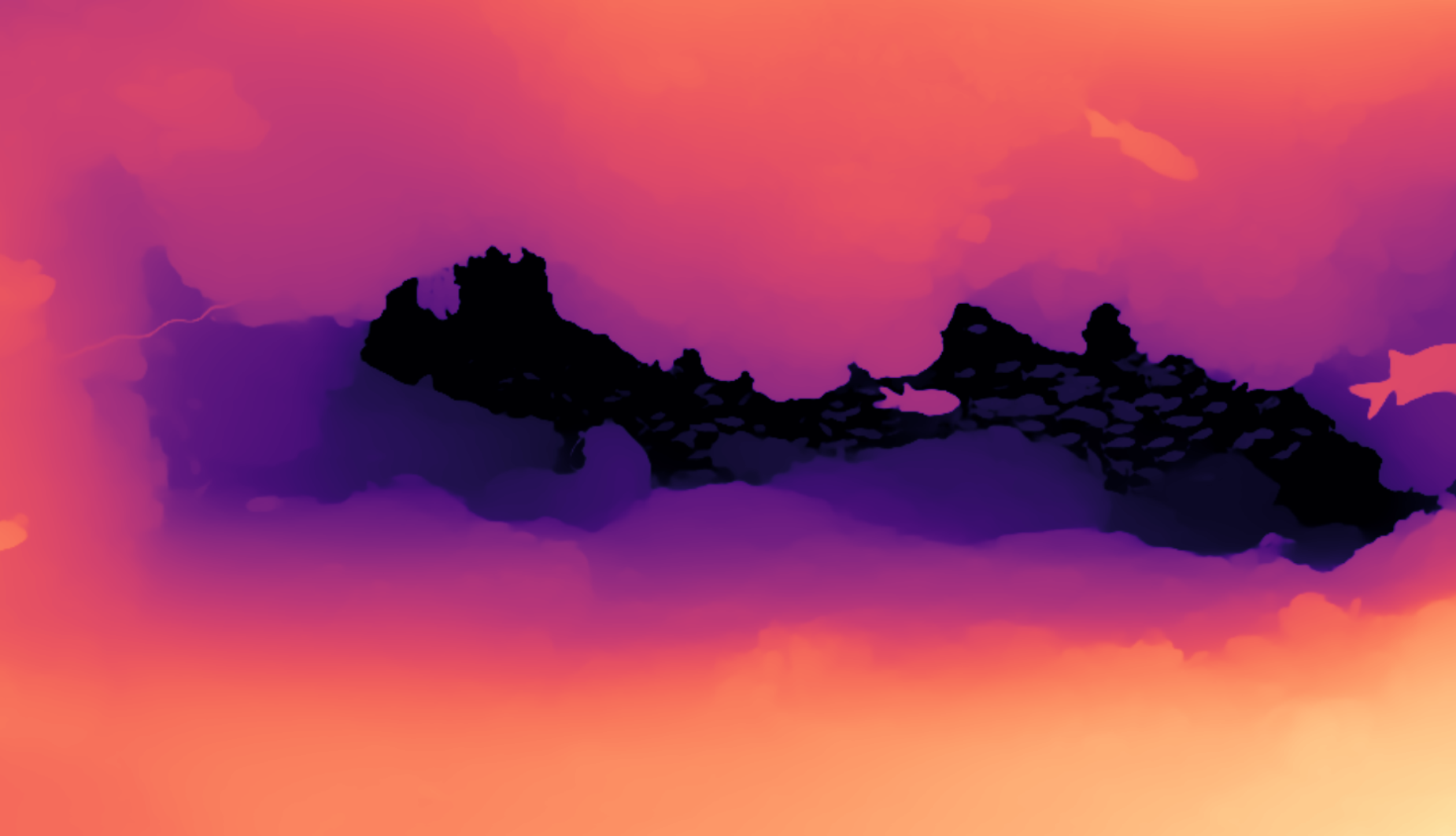

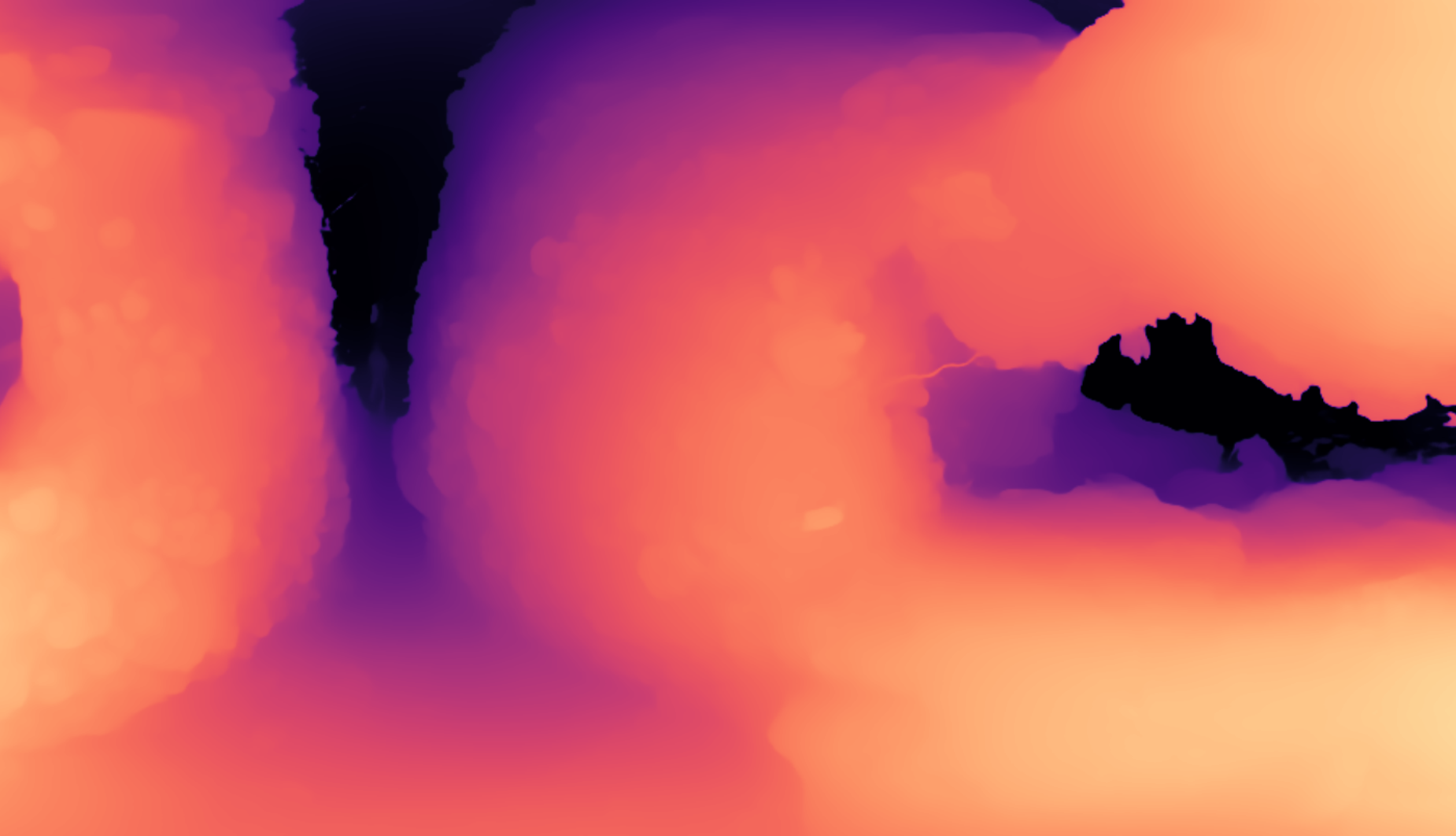

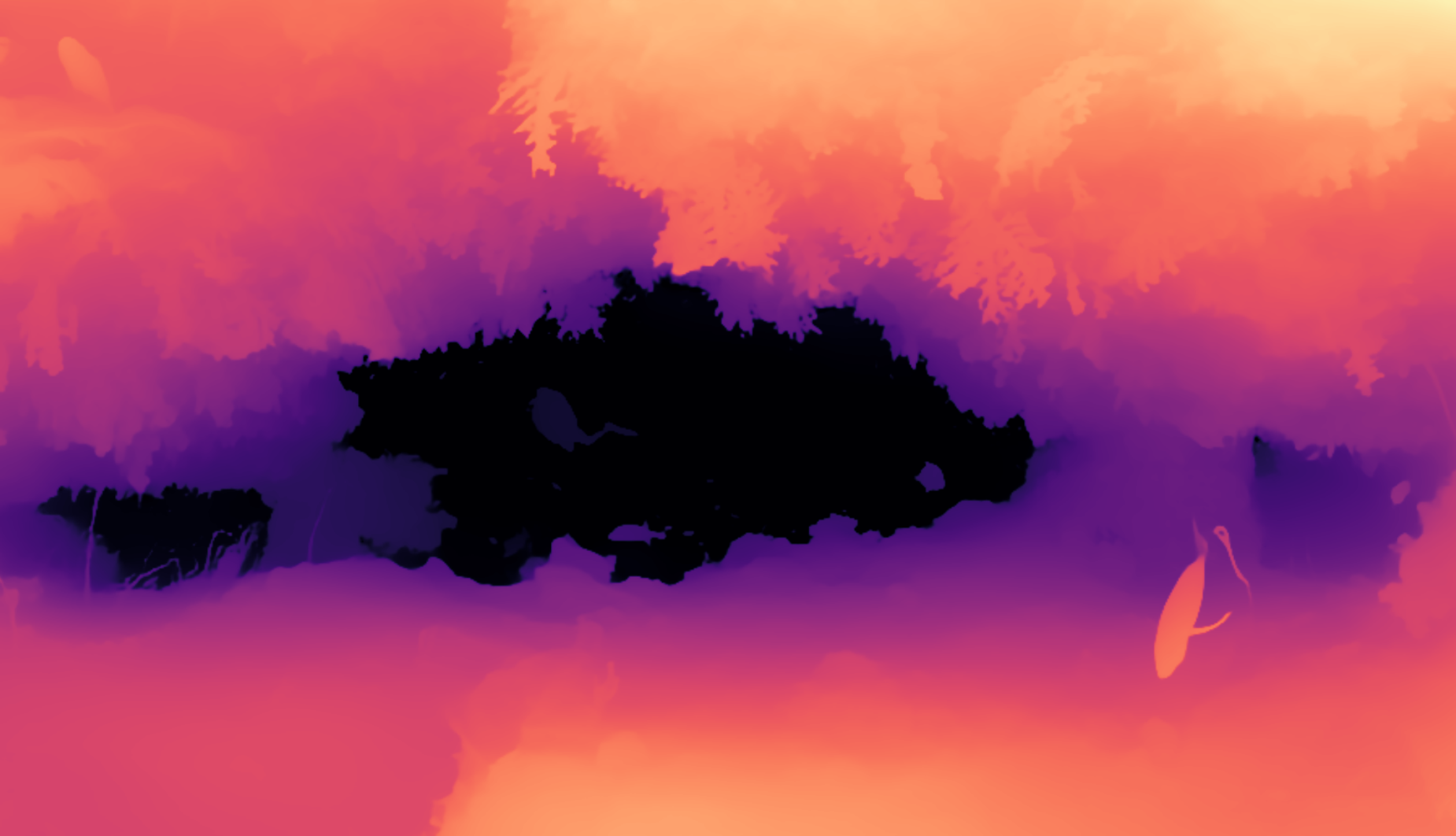

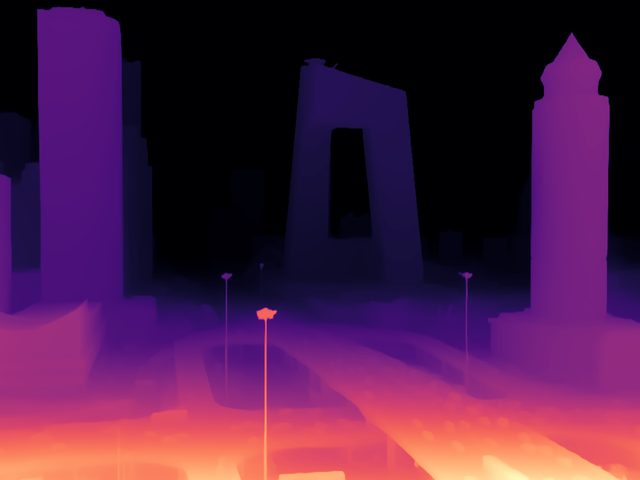

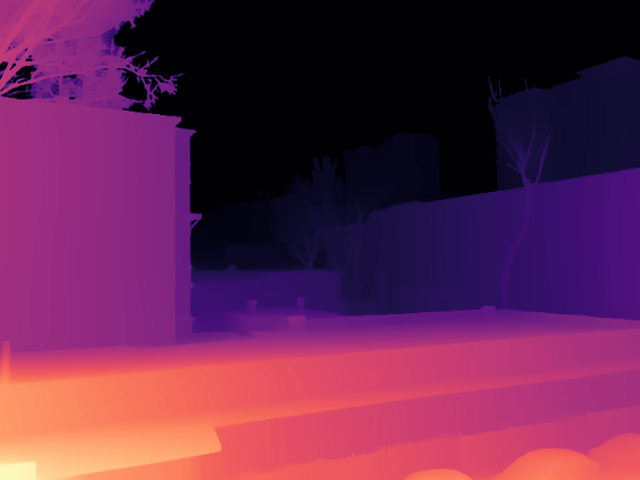

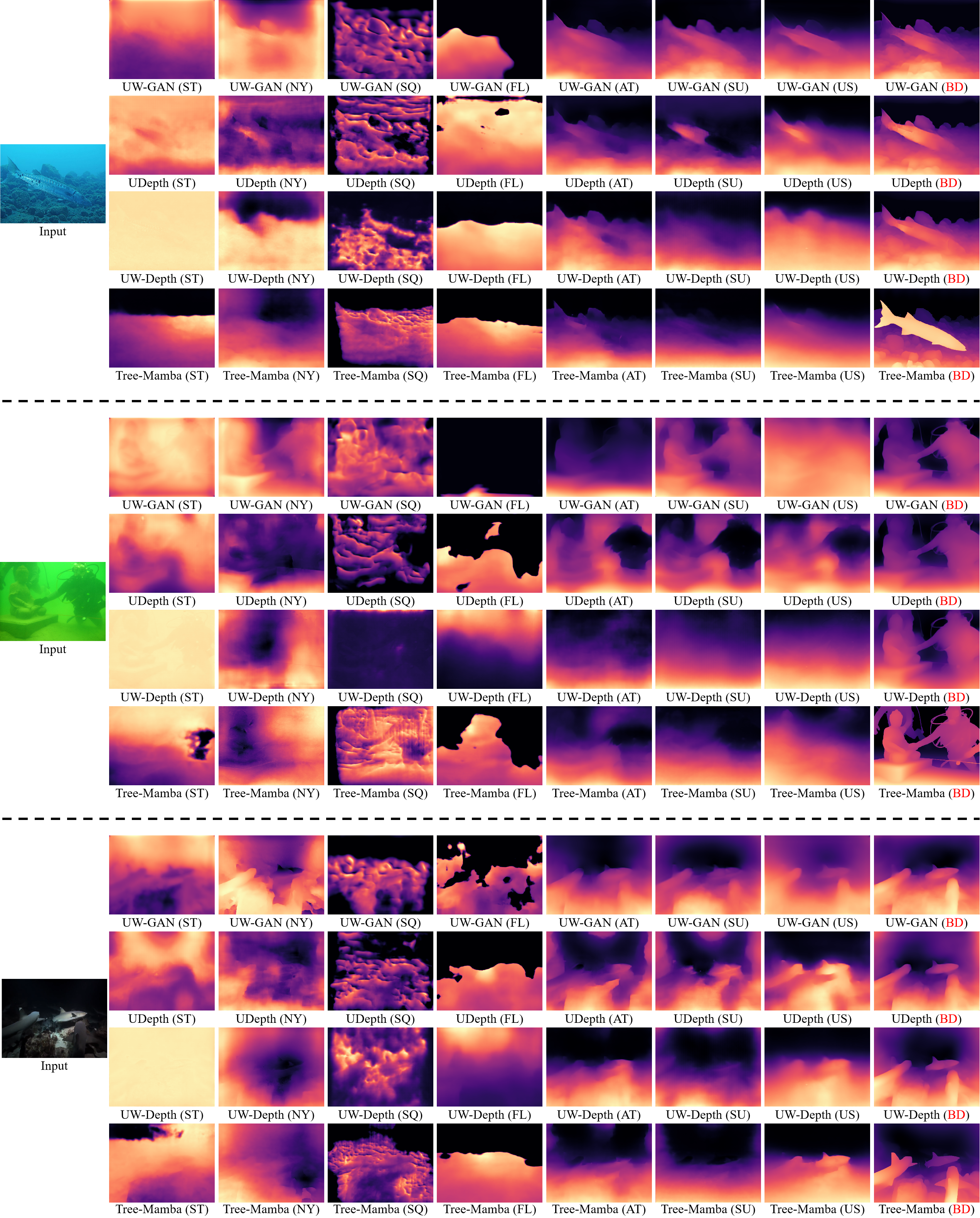

Visual results using our proposed BlueDepth dataset versus other datasets. ST, NY, SQ, FL, AT, SU, US, and BD denote models trained on Sea-Thru, NYU-U, SQUID, FLSea, Atlantis, SUIM-SDA, USOD10K, and our BlueDepth, respectively. The depth results of UW-GAN, UDepth, UW-Depth, and Tree-Mamba are significantly improved by training on our BlueDepth dataset. Moreover, our Tree-Mamba method yields better depth results than its competitors.

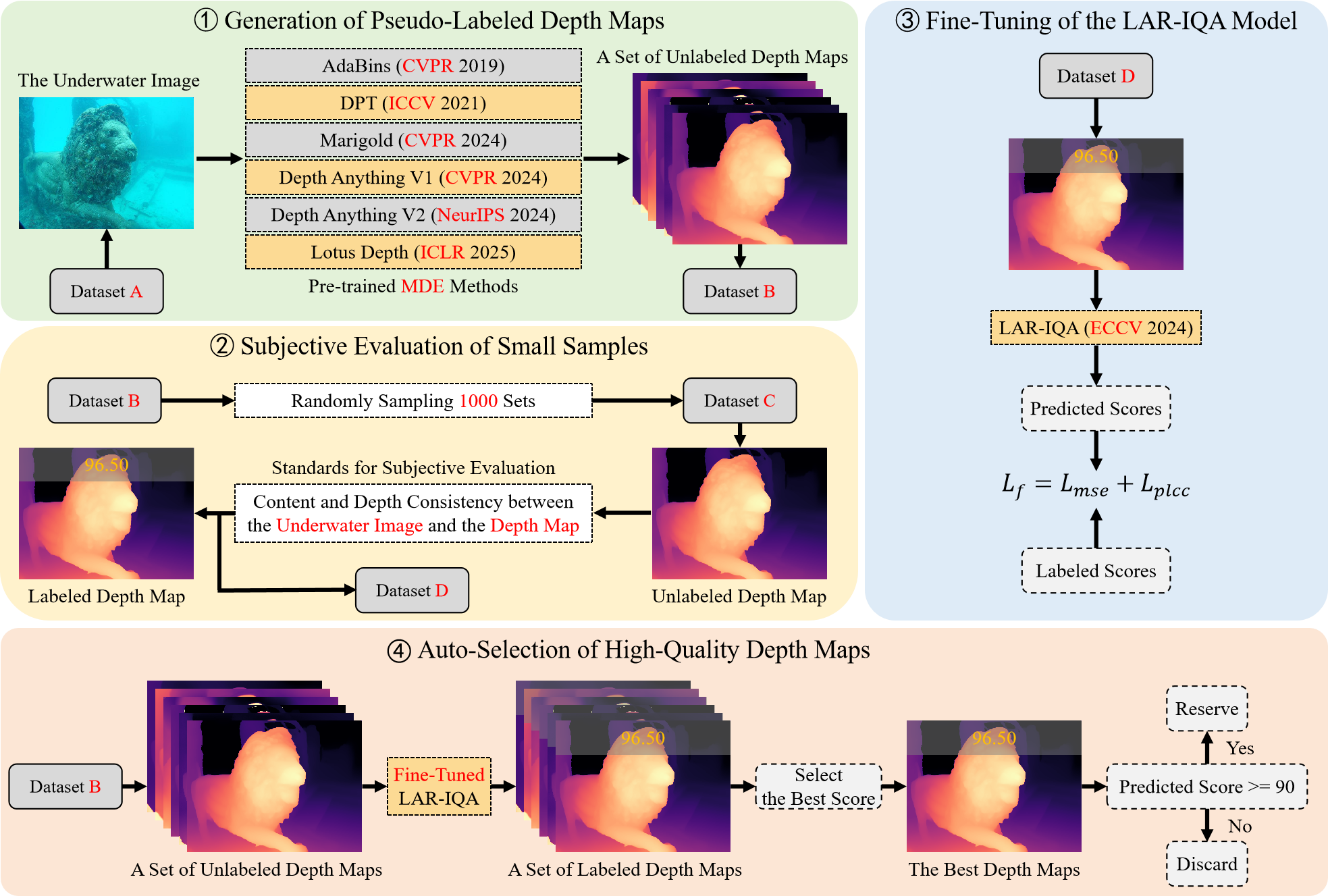

Schematic diagram of the construction of our BlueDepth dataset. Dataset A contains all underwater images from the seven existing UMDE datasets (Sea-Thru, NYU-U, SQUID, FLSea, Atlantis, SUIM-SDA, and USOD10K), while Dataset B stores all pseudo-labeled depth maps for each underwater image from Dataset A. Dataset C is a subset randomly selected from Dataset B, while Dataset D is its subjectively labeled counterpart. The generation and selection of high-quality depth maps involves four main steps. Step 1: Six state-of-the-art MDE methods (AdaBins, DPT, Marigold, Depth Anything V1, Depth Anything V2, and Lotus Depth) are used to generate pseudo-labeled depth maps from Dataset A. Step 2: The depth maps in Dataset C are subjectively evaluated. Step 3: The LAR-IQA model is fine-tuned with Dataset D. Step 4: The fine-tuned LAR-IQA model is employed to automatically select the best depth maps from Dataset B.
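The selection in Step 4 can be pictured with a short sketch. It assumes that exactly one depth map is kept per image and that a quality scorer returns a single number where higher is better; `select_best_depth_maps` and `score_fn` are hypothetical names, and `score_fn` is only a stand-in for the fine-tuned LAR-IQA model, whose actual interface is not described here.

```python
def select_best_depth_maps(candidates, score_fn):
    """Pick the highest-scoring candidate depth map for each image.

    candidates: dict mapping image_id -> dict mapping method_name -> depth map.
    score_fn:   hypothetical callable returning a quality score (higher is better).
    Returns a dict mapping image_id -> (method_name, depth_map).
    """
    selected = {}
    for image_id, by_method in candidates.items():
        # Ties are resolved in favor of the first method in iteration order.
        best_method = max(by_method, key=lambda name: score_fn(by_method[name]))
        selected[image_id] = (best_method, by_method[best_method])
    return selected


# Toy data: depth maps are flat lists, and the dummy scorer is their mean value.
toy_candidates = {
    "img_001": {"method_a": [0.2, 0.4], "method_b": [0.6, 0.8]},
    "img_002": {"method_a": [0.9, 0.7], "method_b": [0.1, 0.3]},
}
toy_selection = select_best_depth_maps(
    toy_candidates, score_fn=lambda depth: sum(depth) / len(depth)
)
```

The dummy mean-value scorer carries no meaning about depth quality; it only makes the example runnable.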

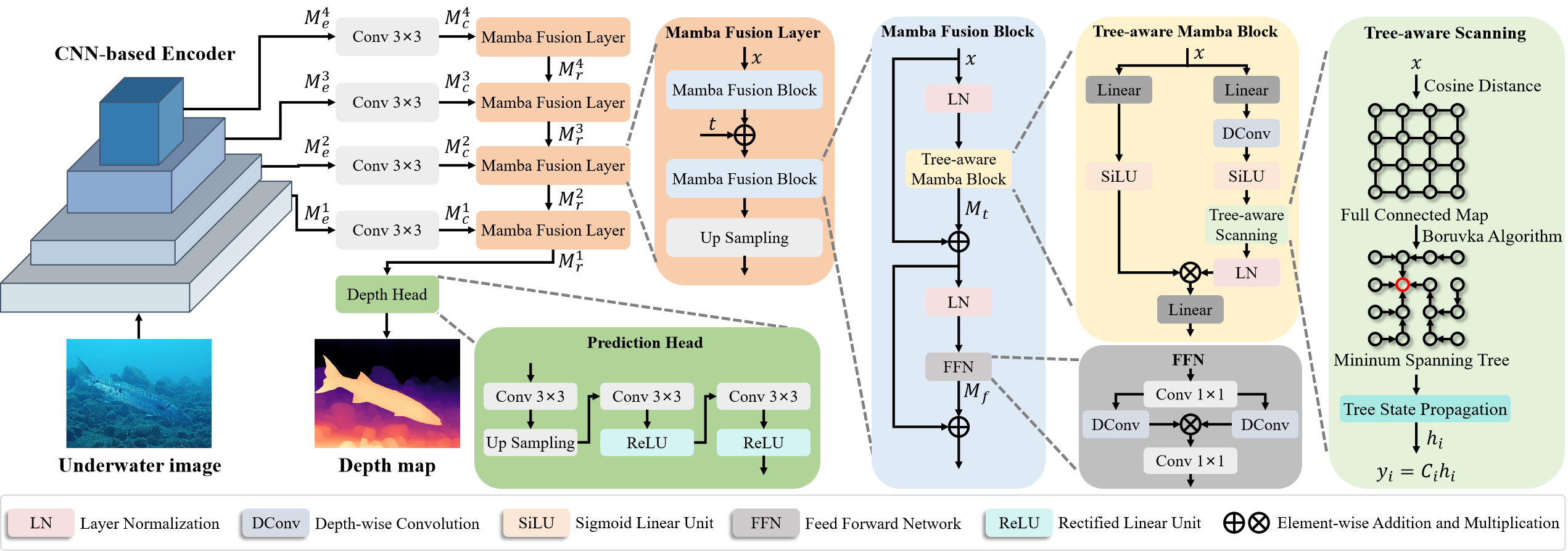

Overview of the proposed Tree-Mamba architecture. An underwater image first passes through a CNN-based encoder to produce multi-scale feature maps. These feature maps are then fed into 3×3 convolutional layers to reduce the feature channels. Next, Mamba fusion layers are used to refine the feature maps, where the proposed tree-aware scanning strategy adaptively constructs a minimum spanning tree with a spatial topological structure from the input feature maps and propagates multi-scale structural features via tree state propagation. Finally, the depth map is produced through the prediction head.
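The bottom-up and top-down traversals behind tree state propagation can be illustrated with a simplified two-pass aggregation over a tree. This is a sketch under strong assumptions: node features are scalars, and a fixed scalar `decay` per edge stands in for the learned, input-dependent state transition of a Mamba layer. The function name `tree_propagate` is hypothetical. Under these assumptions each node ends up with the sum of all node features weighted by `decay` raised to the tree distance.

```python
from collections import deque


def tree_propagate(num_nodes, edges, x, decay=0.5, root=0):
    """Aggregate scalar node features over a tree in two passes.

    edges: list of (u, v) pairs forming a tree over nodes 0..num_nodes-1.
    x:     list of scalar node features.
    decay: assumed fixed per-edge attenuation (stand-in for a learned transition).
    Returns out, where out[v] = sum_u decay ** dist(u, v) * x[u].
    """
    adj = [[] for _ in range(num_nodes)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)

    # BFS from the root: parents always precede their children in `order`.
    parent = [-1] * num_nodes
    seen = [False] * num_nodes
    seen[root] = True
    order, queue = [], deque([root])
    while queue:
        u = queue.popleft()
        order.append(u)
        for v in adj[u]:
            if not seen[v]:
                seen[v] = True
                parent[v] = u
                queue.append(v)

    # Bottom-up pass: each node collects the attenuated states of its subtree.
    agg = list(x)
    for v in reversed(order):
        if parent[v] != -1:
            agg[parent[v]] += decay * agg[v]

    # Top-down pass: each node adds what its parent knows about the rest of
    # the tree, after removing the node's own subtree from the parent's state.
    out = list(agg)
    for v in order:
        p = parent[v]
        if p != -1:
            out[v] = agg[v] + decay * (out[p] - decay * agg[v])
    return out


# Path 0 - 1 - 2 with a single active node: the response decays with distance.
chain = tree_propagate(3, [(0, 1), (1, 2)], [1.0, 0.0, 0.0], decay=0.5)
```

Both passes visit each node once, so the cost is linear in the number of nodes, and the result does not depend on which node is chosen as the root.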

@article{zhuang2025tree,
  title={{Tree-Mamba}: A Tree-Aware Mamba for Underwater Monocular Depth Estimation},
  author={Zhuang, Peixian and Wang, Yijian and Fu, Zhenqi and Zhang, Hongliang and Kwong, Sam and Li, Chongyi},
  journal={arXiv preprint arXiv:2507.07687},
  year={2025},
}